"A quick review of some dynamic multicast routing protocols"

The reason for using multicast is fairly obvious. When designing a scalable multicast routing solution that fits real-world requirements, however, it is hard to decide which of the possible architectures is best. This article therefore reviews some of the options.

Multicast models

There are two models for delivering multicast traffic:

- Any-Source Multicast (ASM): This is the “traditional” multicast model. A group can have several multicast sources, and receivers join the group without specifying which source they want to receive from.

- Source-Specific Multicast (SSM): Unlike ASM, the newer SSM model lets the receiver select the multicast source, which makes the network more efficient and improves security. To make this selection, the receiver must use IGMPv3, the latest version of IGMP (MLDv2 is its IPv6 equivalent).
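The difference between the two models shows up on the receiver side. The following Python snippet is a minimal sketch and is not an IGMP implementation. The class `Membership` and all the addresses are hypothetical. An ASM membership behaves like a (*, G) join that accepts any source. An SSM membership behaves like an IGMPv3 INCLUDE list that names the sources the receiver has chosen.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Membership:
    """Hypothetical receiver membership for one multicast group (not an IGMP implementation)."""

    group: str
    # None models an ASM (*, G) join: any source is accepted.
    # A set of addresses models an IGMPv3 INCLUDE list, i.e. SSM (S, G) channels.
    sources: Optional[FrozenSet[str]] = None

    def accepts(self, source: str, group: str) -> bool:
        """Would this receiver want traffic sent by `source` to `group`?"""
        if group != self.group:
            return False
        if self.sources is None:
            return True  # ASM: the receiver does not choose the source
        return source in self.sources  # SSM: only explicitly selected sources


asm = Membership("239.1.1.1")
ssm = Membership("232.1.1.1", frozenset({"10.0.0.5"}))
print(asm.accepts("10.9.9.9", "239.1.1.1"))  # True
print(ssm.accepts("10.9.9.9", "232.1.1.1"))  # False
```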

ASM multicast routing schemes

This section discusses some of the protocols used to route multicast traffic under the ASM model:

- "Source-tree" scheme

In this scheme, each source is the root of its own distribution tree. Because traffic is initially flooded in all directions, routers are very likely to receive the same stream on several interfaces. To prevent this, a router discards any multicast traffic that does not arrive on the interface it would use to send unicast traffic to the multicast source. This is known as the Reverse Path Forwarding (RPF) check.
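The RPF check can be sketched as a longest-prefix lookup in a routing table, followed by a comparison with the arrival interface. The routing table, interface names and function names below are hypothetical illustrations and are not taken from any router implementation.

```python
import ipaddress
from typing import List, Tuple

Route = Tuple[ipaddress.IPv4Network, str]

# Hypothetical unicast routing table: (destination prefix, outgoing interface).
UNICAST_ROUTES: List[Route] = [
    (ipaddress.ip_network("0.0.0.0/0"), "eth2"),
    (ipaddress.ip_network("10.0.0.0/8"), "eth0"),
    (ipaddress.ip_network("10.1.0.0/16"), "eth1"),
]


def rpf_interface(source: str, routes: List[Route]) -> str:
    """Interface the router would use to send unicast traffic to `source` (longest-prefix match)."""
    addr = ipaddress.ip_address(source)
    matches = [(net, iface) for net, iface in routes if addr in net]
    if not matches:
        raise LookupError(f"no route to {source}")
    _, iface = max(matches, key=lambda m: m[0].prefixlen)
    return iface


def rpf_check(source: str, arrival_iface: str, routes: List[Route]) -> bool:
    """Accept a multicast packet only if it arrived on the RPF interface toward its source."""
    return arrival_iface == rpf_interface(source, routes)


print(rpf_check("10.1.2.3", "eth1", UNICAST_ROUTES))  # True: arrived on the RPF interface
print(rpf_check("10.1.2.3", "eth0", UNICAST_ROUTES))  # False: duplicate copy, dropped
```

The `routes` argument is where DVMRP and PIM-DM would differ. The former would consult its own multicast routing table, and the latter the unicast one.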

Two protocols that use this RPF-based flooding technique are:

o Distance Vector Multicast Routing Protocol (DVMRP)

o Protocol Independent Multicast Dense Mode (PIM-DM)

These protocols are similar. The difference is that the former (the older of the two) uses a routing table dedicated to multicast traffic, whereas PIM-DM relies on the unicast routing table.
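Both protocols follow a flood-and-prune approach. Traffic that passes the RPF check is copied to every other interface. Downstream routers with no interested receivers send Prune messages to stop it, and Graft messages restore a pruned branch when a receiver appears. The class below is a simplified, hypothetical model of that per-(S, G) state. It omits prune timers, which in real deployments eventually expire and cause the traffic to be flooded again.

```python
from typing import Set


class DenseModeEntry:
    """Hypothetical (S, G) state in a flood-and-prune (dense mode) router."""

    def __init__(self, rpf_iface: str, all_ifaces: Set[str]) -> None:
        self.rpf_iface = rpf_iface
        # Start by flooding on every interface except the one facing the source.
        self.oifs: Set[str] = set(all_ifaces) - {rpf_iface}

    def prune(self, iface: str) -> None:
        """A downstream neighbor with no interested receivers asked us to stop sending."""
        self.oifs.discard(iface)

    def graft(self, iface: str) -> None:
        """A receiver appeared behind a previously pruned interface."""
        if iface != self.rpf_iface:
            self.oifs.add(iface)

    def forward(self, arrival_iface: str) -> Set[str]:
        """Interfaces a packet is copied to; packets failing the RPF check are dropped."""
        if arrival_iface != self.rpf_iface:
            return set()
        return set(self.oifs)


entry = DenseModeEntry("eth0", {"eth0", "eth1", "eth2"})
entry.prune("eth2")  # nobody behind eth2 wants the group
print(sorted(entry.forward("eth0")))  # ['eth1']
```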

- "Shared-tree" scheme

In this architecture, routing information is centralized in one of the routers of the topology. That router acts as the root of a distribution tree shared by all the sources of a group. This raises further design questions, such as how routes are distributed among these central routers and how to make the service they provide highly available.

Some of the protocols that fall into this category are:

o Core-Based Tree (CBT)

o Protocol Independent Multicast Sparse Mode (PIM-SM)

o Bidirectional Protocol Independent Multicast (Bidir-PIM)


Protocol comparison

Shared-tree

The shared-tree protocols mentioned above differ in how they operate:

- Core-Based Tree (CBT)

The router that centralizes the routes is called the "Core". When a multicast source starts transmitting, its local router forwards the traffic to the Core. When a receiver joins a multicast group, the router on its network segment sends the Join message toward the Core. The multicast data follows that same path, because the Core centralizes not only the routing tables but also the multicast traffic. The drawback of this behavior is that, unless the Core is very close to the multicast source, the paths between source and receiver are likely to be suboptimal. This example, found at http://www.cl.cam.ac.uk/~jac22/books/mm/book/node78.html, illustrates the problem:

Figure 1 – Suboptimal routes with CBT

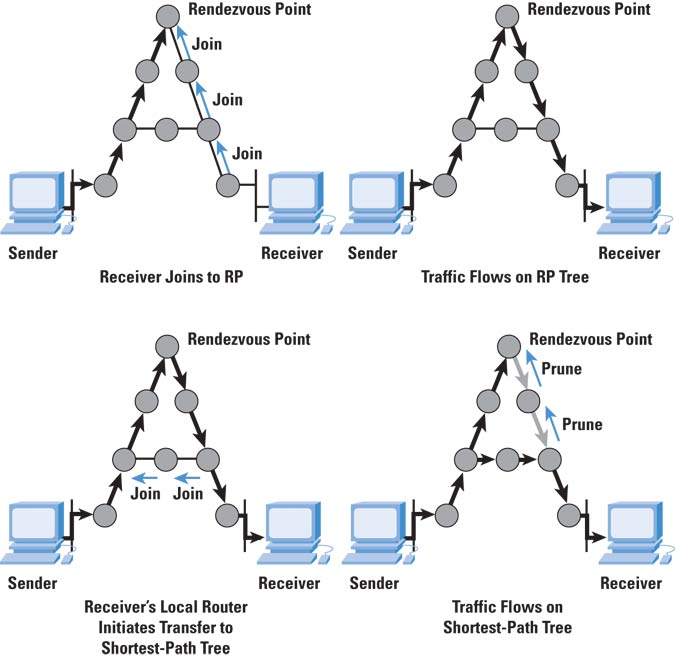
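A hop count on a toy topology reproduces the effect shown in the figure. In this hypothetical graph, the node names are invented for the example and every link costs one hop. The Core is far from both the source `S` and the receiver `R`, so the path forced through the Core is three times longer than the shortest path. As a simplification, the sketch assumes that the Core-to-receiver branch follows the shortest path, which is the path the Join took.

```python
from collections import deque
from typing import Dict, List

# Hypothetical topology as an adjacency list; every link counts as one hop.
TOPOLOGY: Dict[str, List[str]] = {
    "S": ["A"],
    "A": ["S", "B", "C"],
    "B": ["A", "R"],
    "C": ["A", "D"],
    "D": ["C", "CORE"],
    "CORE": ["D"],
    "R": ["B"],
}


def hops(graph: Dict[str, List[str]], src: str, dst: str) -> int:
    """Breadth-first search: number of links on a shortest path from src to dst."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return dist[node]
        for nbr in graph[node]:
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                queue.append(nbr)
    raise ValueError(f"{dst} is unreachable from {src}")


shortest = hops(TOPOLOGY, "S", "R")
via_core = hops(TOPOLOGY, "S", "CORE") + hops(TOPOLOGY, "CORE", "R")
print(shortest, via_core)  # 3 9
```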

- Protocol Independent Multicast Sparse Mode (PIM-SM)

This protocol removes the requirement that multicast traffic pass through the Core router, which PIM-SM calls the Rendezvous Point (RP). As a result, if the path through the RP is not optimal, it is not used.

The RP joins a source tree to reach each multicast source. In addition, each last-hop (client) router, triggered by its receivers' Join messages, can build its own shortest-path tree (SPT) directly toward the source. Traffic switches to these paths as soon as they are found to be better than the path through the RP.


This procedure is clearly illustrated in the following diagram, which appears at http://www.cisco.com/web/about/ac123/ac147/ac174/ac198/about_cisco_ipj_archive_article09186a00800c851e.html

Figure 2 – Choosing the optimal path in PIM-SM
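The switchover decision at a last-hop router can be sketched as follows. The threshold value and the message strings are assumptions made for illustration. Some implementations, such as Cisco IOS, switch by default as soon as the first packet arrives. The sketch also collapses into one step what PIM-SM does in two: a real router only prunes the source off the shared tree once traffic is arriving on the shortest-path tree.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LastHopRouter:
    """Hypothetical last-hop router state for one (S, G) flow in PIM-SM (simplified)."""

    # Assumed policy knob: switch once the rate exceeds this value.
    # 0 switches on the first packet; float("inf") never leaves the shared tree.
    spt_threshold_kbps: float = 0.0
    on_spt: bool = False

    def on_shared_tree_traffic(self, rate_kbps: float) -> List[str]:
        """PIM messages this router would send after seeing (S, G) traffic arrive via the RP."""
        if self.on_spt or rate_kbps <= self.spt_threshold_kbps:
            return []
        self.on_spt = True
        # Join the shortest-path tree toward the source, then stop receiving S via the RP.
        return ["Join(S,G) toward source", "Prune(S,G,rpt) toward RP"]


router = LastHopRouter()
print(router.on_shared_tree_traffic(64.0))
```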

There are two options for configuring the RP: configure it manually on every router, or use one of the auto-discovery methods, Auto-RP or BSR. Auto-RP is Cisco-proprietary, while BSR is part of the PIMv2 standard. The former offers more flexibility, since it allows parameters such as timers to be tuned and can be used in the hybrid mode known as "PIM sparse-dense mode".
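With manual configuration, every router must hold the same group-to-RP mapping. Auto-RP and BSR exist to distribute such a mapping automatically. The sketch below shows only the lookup a router performs with a hypothetical static map, in which the most specific configured group range wins. Real BSR deployments add a priority comparison and a hash among candidate RPs, which are not modeled here.

```python
import ipaddress
from typing import Dict, Optional

# Hypothetical static group-range -> RP mapping, configured identically on every router.
STATIC_RP_MAP: Dict[str, str] = {
    "224.0.0.0/4": "10.0.0.1",   # default RP for all multicast groups
    "239.1.0.0/16": "10.0.0.2",  # more specific range served by another RP
}


def rp_for_group(group: str, rp_map: Dict[str, str]) -> Optional[str]:
    """Pick the RP whose configured group range most specifically covers `group`."""
    addr = ipaddress.ip_address(group)
    matches = [
        (ipaddress.ip_network(rng), rp)
        for rng, rp in rp_map.items()
        if addr in ipaddress.ip_network(rng)
    ]
    if not matches:
        return None  # not a group this router knows an RP for
    _, rp = max(matches, key=lambda m: m[0].prefixlen)
    return rp


print(rp_for_group("239.1.5.5", STATIC_RP_MAP))  # 10.0.0.2
print(rp_for_group("239.2.0.1", STATIC_RP_MAP))  # 10.0.0.1
```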

Because the RP is a critical point in the PIM-SM architecture, some form of redundancy is needed. RP high availability is achieved by running more than one RP at the same time, all sharing the same RP address (Anycast-RP), and coordinating them with the Multicast Source Discovery Protocol (MSDP). MSDP lets the RPs share information about the state of the multicast sources.


MSDP is also used when multiple multicast "domains" or "zones" must be created, either for scalability reasons or because there are several administrative domains (for example, several ISPs). Each of these domains may be served by different RPs, and they need MSDP to exchange information about the sources located in each of them.
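A toy class can illustrate the core idea of MSDP: RPs tell each other which sources are active. It is a hypothetical simplification. Real MSDP runs over TCP, applies peer-RPF checks before accepting Source-Active (SA) messages, and periodically refreshes and ages out its cache. The `originator` field reflects the fact that, with Anycast-RP, each RP must still identify itself with a unique address even though all of them share the anycast RP address.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

SaEntry = Tuple[str, str, str]  # (source, group, originating RP)


@dataclass
class MsdpRp:
    """Hypothetical RP that shares its active sources with MSDP peers (no peer-RPF or flooding rules)."""

    originator: str  # unique per RP, even when the RPs share an anycast RP address
    peers: List["MsdpRp"] = field(default_factory=list, repr=False)
    sa_cache: Set[SaEntry] = field(default_factory=set)

    def register_local_source(self, source: str, group: str) -> None:
        """A first-hop router registered a new source with this RP: announce it to the peers."""
        entry = (source, group, self.originator)
        self.sa_cache.add(entry)
        for peer in self.peers:
            peer.receive_sa(entry)

    def receive_sa(self, entry: SaEntry) -> None:
        """Cache a Source-Active (SA) announcement learned from a peer."""
        self.sa_cache.add(entry)

    def knows_source(self, source: str, group: str) -> bool:
        return any(s == source and g == group for s, g, _ in self.sa_cache)


rp1, rp2 = MsdpRp("10.0.0.1"), MsdpRp("10.0.0.2")
rp1.peers.append(rp2)
rp2.peers.append(rp1)
rp1.register_local_source("192.0.2.10", "239.1.1.1")  # source registered only with rp1
print(rp2.knows_source("192.0.2.10", "239.1.1.1"))  # True
```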

- Bidirectional Protocol Independent Multicast (Bidir-PIM)

Bidir-PIM is based on PIM-SM, with some differences. PIM-SM uses the RP (Rendezvous Point) to manage data delivery to each multicast group. It builds a shared tree from the RP to the receivers (clients) and a source tree from the source to the RP. This can become a problem when there are many sources, because the RP may become overloaded.

In Bidirectional PIM there is no source tree between the source and the RP. That segment is also covered by a shared tree, which makes the protocol highly scalable with respect to the number of multicast sources.

This greater scalability comes at a cost: all traffic flows toward the RP, and, unlike PIM-SM, it is not possible to switch to a source-tree (shortest-path) route.
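Counting the multicast routing entries an RP must hold gives a rough view of the scalability argument. The function below is a deliberately simplified model that ignores (S, G, rpt) state and intermediate routers. With PIM-SM, the RP keeps one (*, G) entry per group plus one (S, G) entry per active source. With Bidir-PIM, a single (*, G) entry per group is enough.

```python
def rp_state_entries(groups: int, sources_per_group: int, bidir: bool) -> int:
    """Rough count of multicast routing entries held at the RP (simplified model)."""
    if bidir:
        # One (*, G) entry per group, regardless of how many sources send to it.
        return groups
    # PIM-SM: one (*, G) entry plus one (S, G) entry per active source, per group.
    return groups * (1 + sources_per_group)


print(rp_state_entries(100, 50, bidir=False))  # 5100
print(rp_state_entries(100, 50, bidir=True))   # 100
```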

Because this protocol only uses shared trees, it needs an additional mechanism to forward traffic toward the RP. This mechanism introduces a new role, the Designated Forwarder (DF). A DF is elected on each network segment and decides which packets must be forwarded toward the RP.
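The DF is elected per RP and per network segment, and the router with the best unicast route to the RP wins. The snippet below is a minimal sketch of the comparison step only. It assumes the ordering used by the Bidir-PIM specification: metric preference first, then metric, with the highest IP address breaking ties. The election messages themselves (Offer, Winner, Backoff, Pass) are not modeled, and the addresses are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DfOffer:
    """What one router on a segment advertises in the DF election (simplified)."""

    router_ip: str
    metric_preference: int  # preference (administrative distance) of its route to the RP
    metric: int             # metric of its route to the RP


def elect_df(offers: List[DfOffer]) -> str:
    """Best route to the RP wins: lowest (preference, metric); ties go to the highest IP."""
    best = min(
        offers,
        key=lambda o: (o.metric_preference, o.metric, -int(ipaddress.ip_address(o.router_ip))),
    )
    return best.router_ip


offers = [
    DfOffer("10.0.0.1", 110, 20),
    DfOffer("10.0.0.2", 110, 10),
    DfOffer("10.0.0.3", 110, 10),
]
print(elect_df(offers))  # 10.0.0.3 (tie on metric, highest address wins)
```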

The protocol is called bidirectional because the networks where the traffic sources are located can also be receivers. This may be needed in designs with applications that require many sources and many receivers at the same time.

Because of this bidirectional behavior, the usual Reverse Path Forwarding (RPF) check cannot be applied to traffic coming from sources: the same shared tree carries traffic both from the sources toward the RP and from the RP toward the receivers. The DF takes over the loop-prevention role of the RPF check.
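Instead of the RPF check, a Bidir-PIM router decides whether to accept a packet based on its DF role. The hypothetical class below sketches that rule in simplified form. Traffic is accepted from the interface toward the RP or from any segment where this router is the DF. It is forwarded both upstream toward the RP and to DF segments that have receivers, and never back out the interface it came from.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class BidirGroupEntry:
    """Hypothetical (*, G) state in a Bidir-PIM router (simplified)."""

    rpf_iface: str                                        # interface toward the RP address
    df_ifaces: Set[str] = field(default_factory=set)      # segments where this router won the DF election
    joined_ifaces: Set[str] = field(default_factory=set)  # segments with downstream receivers

    def accept(self, arrival_iface: str) -> bool:
        """Replaces the RPF check: accept traffic from the RP side or from segments we are DF for."""
        return arrival_iface == self.rpf_iface or arrival_iface in self.df_ifaces

    def forward(self, arrival_iface: str) -> Set[str]:
        """Upstream copy toward the RP plus downstream copies to interested DF segments."""
        if not self.accept(arrival_iface):
            return set()
        olist = {self.rpf_iface} | (self.joined_ifaces & self.df_ifaces)
        return olist - {arrival_iface}


entry = BidirGroupEntry("eth0", df_ifaces={"eth1", "eth2"}, joined_ifaces={"eth2"})
print(sorted(entry.forward("eth1")))  # ['eth0', 'eth2']: from a local source, up and across
print(sorted(entry.forward("eth3")))  # []: not DF on eth3, packet dropped
```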

The following image shows the differences between Source-tree, Shared-tree and Bidir-PIM. In the last case there are two sources (S1 and S2), and you can see how a bidirectional path is created:

Figure 3 – Differences between Source-tree, Shared-tree and Bidir-PIM

As in PIM-SM, the RP in Bidir-PIM is a critical point (even more so in this case) whose service must be made redundant. The PIM-SM approach cannot be reused here, however. Several RPs cannot be active at the same time as with Anycast-RP, because Bidir-PIM does not use a source tree to reach the source and all traffic flows toward the RP. Bidir-PIM instead uses the so-called Phantom RP, a procedure similar to VRRP or HSRP in which a "virtual instance of the RP" is created.
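One widespread way to build a Phantom RP is not detailed in the original text and is shown here only as an assumption. An RP address is chosen that no router actually owns, and two routers advertise subnets containing it with different prefix lengths. Unicast longest-prefix matching then steers the shared tree toward the primary router, and toward the backup if the primary's route disappears. All addresses and router names below are hypothetical.

```python
import ipaddress
from typing import FrozenSet, List, Tuple

# Hypothetical phantom RP address: no router owns it, it only needs to be routable.
PHANTOM_RP = ipaddress.ip_address("10.99.99.2")

# Routes learned via the IGP: (advertised prefix, router advertising it).
ADVERTISED: List[Tuple[str, str]] = [
    ("10.99.99.0/30", "R1"),  # primary: more specific prefix
    ("10.99.99.0/29", "R2"),  # backup: less specific prefix covering the same address
]


def active_rp_router(advertised: List[Tuple[str, str]], failed: FrozenSet[str] = frozenset()) -> str:
    """Longest-prefix match decides which router's link currently 'holds' the phantom RP."""
    candidates = [
        (ipaddress.ip_network(prefix), router)
        for prefix, router in advertised
        if router not in failed and PHANTOM_RP in ipaddress.ip_network(prefix)
    ]
    if not candidates:
        raise LookupError("phantom RP address is unreachable")
    return max(candidates, key=lambda c: c[0].prefixlen)[1]


print(active_rp_router(ADVERTISED))                          # R1
print(active_rp_router(ADVERTISED, failed=frozenset({"R1"})))  # R2
```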

SSM multicast routing schemes

The protocol based on the SSM model is Protocol Independent Multicast Source-Specific Multicast (PIM-SSM). It applies SSM logic, in which a multicast group is defined not only by its set of receivers but also by the source they want to receive from.

This protocol is designed for one-to-many architectures and can be seen as a modification of PIM-SM, with one major difference: PIM-SSM does not use RPs. The multicast source is known in advance, because the receiver has chosen it explicitly, and the path is selected by the unicast routing protocol.
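On the router side, the practical consequence is a choice of where to send the PIM Join. The following sketch assumes the default IPv4 SSM range 232.0.0.0/8 assigned by IANA; routers can be configured with other ranges. The function name and message strings are hypothetical.

```python
import ipaddress
from typing import Optional

SSM_RANGE = ipaddress.ip_network("232.0.0.0/8")  # default IPv4 SSM range (assumed configuration)


def pim_join_target(group: str, source: Optional[str], rp: Optional[str]) -> str:
    """Decide where a last-hop router sends its PIM Join (simplified model)."""
    if ipaddress.ip_address(group) in SSM_RANGE:
        if source is None:
            raise ValueError("SSM groups require an explicit source (IGMPv3 INCLUDE)")
        return f"(S,G) Join toward source {source}"  # no RP involved
    if rp is None:
        raise ValueError("ASM group but no RP is known")
    return f"(*,G) Join toward RP {rp}"


print(pim_join_target("232.1.1.1", "192.0.2.10", None))  # (S,G) Join toward source 192.0.2.10
print(pim_join_target("239.1.1.1", None, "10.0.0.1"))    # (*,G) Join toward RP 10.0.0.1
```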